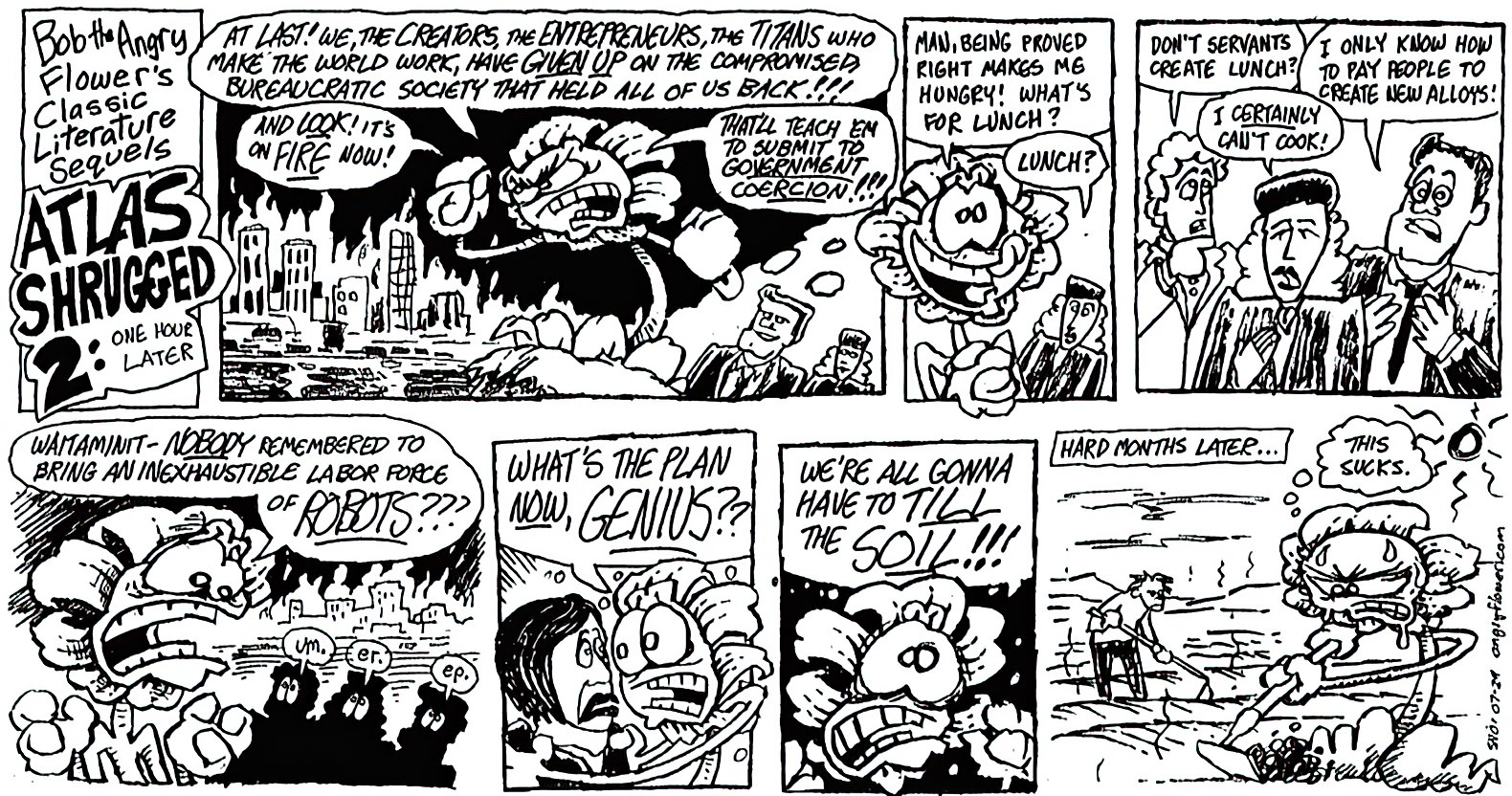

My favorite Bob the Angry Flower of all time.

The first law of robotics is: we don’t talk about robot wars.

I can guess the second one!

I think AI experts would probably rather be ruled by a large language model than by a general AI that adheres to Asimov’s laws.

With large language models it will basically be a technocracy of prompt hackers, who are at least human and thus have a stake in humanity.

LLMs spit out language that can be used as a prompt… no need for the middleman.

The whole point of Asimov’s laws of robotics was that things can go wrong even if a system adheres to them perfectly. And current AI attempts don’t even have that.

I honestly wonder whether an LLM trained once a month on every human on earth’s opinions about the world and what should be done to fix it would show a “normalized trend” in that regard.

LLMBOT 9000 2024!

There are more dumb people than smart people so a “normalized trend” would be a pretty bad idea.

Most people, regardless of personal beliefs, are highly susceptible to populist rhetoric, and generally you want an AI governance bot to make the right choices, not the popular choices.

Yeah it would be mostly “China stand up!” and “Messi for world leader”.

There are more dumb people than smart people.

Since “dumb” and “smart” are both defined relative to the median, it logically follows that there are always about as many “dumb” people as “smart” people.

It really doesn’t, because there are very few smart people and shitloads of stupid people. “Average” intelligence levels are quite low, and this is why.

Didn’t know this comic was still around.

Love BTAF

Terrible. 2/10. Would not read again.